Download AWS Certified Machine Learning - Specialty.MLS-C01.Pass4Success.2026-02-05.125q.vcex

| Field | Value |
| --- | --- |
| Vendor | Amazon |
| Exam Code | MLS-C01 |
| Exam Name | AWS Certified Machine Learning - Specialty |
| Date | Feb 05, 2026 |
| File Size | 885 KB |

How to open VCEX files?

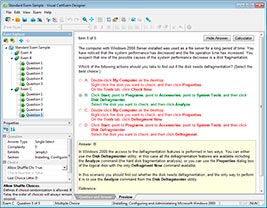

Files with VCEX extension can be opened by ProfExam Simulator.


Demo Questions

Question 1

[Data Engineering]

A cybersecurity company is collecting on-premises server logs, mobile app logs, and IoT sensor data. The company backs up the ingested data in an Amazon S3 bucket and sends the ingested data to Amazon OpenSearch Service for further analysis. Currently, the company has a custom ingestion pipeline that is running on Amazon EC2 instances. The company needs to implement a new serverless ingestion pipeline that can automatically scale to handle sudden changes in the data flow.

Which solution will meet these requirements MOST cost-effectively?

- Create one Amazon Kinesis data stream. Create one Amazon Data Firehose delivery stream to send data to OpenSearch Service. Configure the delivery stream to back up the data to the S3 bucket. Connect the delivery stream to the data stream. Configure the data sources to send data to the data stream.

- Create two Amazon Data Firehose delivery streams to send data to the S3 bucket and OpenSearch Service. Configure the data sources to send data to the delivery streams.

- Create one Amazon Data Firehose delivery stream to send data to OpenSearch Service. Configure the delivery stream to back up the raw data to the S3 bucket. Configure the data sources to send data to the delivery stream.

- Create one Amazon Kinesis data stream. Create two Amazon Data Firehose delivery streams to send data to the S3 bucket and OpenSearch Service. Connect the delivery streams to the data stream. Configure the data sources to send data to the data stream.

Correct answer: B

Question 2

[Modeling]

An insurance company developed a new experimental machine learning (ML) model to replace an existing model that is in production. The company must validate the quality of predictions from the new experimental model in a production environment before the company uses the new experimental model to serve general user requests.

Only one model can serve user requests at a time. The company must measure the performance of the new experimental model without affecting the current live traffic.

Which solution will meet these requirements?

- A/B testing

- Canary release

- Shadow deployment

- Blue/green deployment

Correct answer: C
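
The idea behind a shadow deployment can be illustrated with a minimal sketch: the production model answers every request, while a copy of each request is also sent to the experimental model, whose prediction is only logged for later comparison. The two model functions, the thresholds, and the `handle_request` name are hypothetical stand-ins for illustration, not a SageMaker API.

```python
from typing import List, Tuple

def production_model(score: float) -> int:
    """Hypothetical live model: predicts 1 when the score exceeds 0.5."""
    return int(score > 0.5)

def experimental_model(score: float) -> int:
    """Hypothetical candidate model with a different decision threshold."""
    return int(score > 0.4)

# (input, live prediction, shadow prediction) tuples kept for offline comparison.
shadow_log: List[Tuple[float, int, int]] = []

def handle_request(score: float) -> int:
    """Serve the request from production; mirror it to the shadow model."""
    live = production_model(score)
    try:
        shadow = experimental_model(score)
        shadow_log.append((score, live, shadow))
    except Exception:
        # A failure in the shadow path must never affect the live response.
        pass
    return live  # Only the production prediction reaches the user.

responses = [handle_request(s) for s in (0.1, 0.45, 0.9)]
agreement = sum(live == shadow for _, live, shadow in shadow_log) / len(shadow_log)
print(responses, round(agreement, 3))
```

Because the caller only ever receives `live`, the experimental model is evaluated on real traffic without influencing it.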

Question 3

[Modeling]

A large mobile network operating company is building a machine learning model to predict customers who are likely to unsubscribe from the service. The company plans to offer an incentive for these customers as the cost of churn is far greater than the cost of the incentive.

The model produces the following confusion matrix after evaluating on a test dataset of 100 customers:

Based on the model evaluation results, why is this a viable model for production?

- The model is 86% accurate and the cost incurred by the company as a result of false negatives is less than the false positives.

- The precision of the model is 86%, which is less than the accuracy of the model.

- The model is 86% accurate and the cost incurred by the company as a result of false positives is less than the false negatives.

- The precision of the model is 86%, which is greater than the accuracy of the model.

Correct answer: C
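
The confusion matrix itself is not reproduced in the text above, so the sketch below uses assumed counts purely for illustration; they are chosen to sum to 100 customers and to give the 86% accuracy mentioned in the options. The unit costs are also hypothetical and only reflect the statement that churn costs far more than the incentive.

```python
def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Share of all predictions that are correct."""
    return (tp + tn) / (tp + fp + fn + tn)

def precision(tp: int, fp: int) -> float:
    """Share of predicted churners who actually churn."""
    return tp / (tp + fp)

def recall(tp: int, fn: int) -> float:
    """Share of actual churners that the model catches."""
    return tp / (tp + fn)

# Assumed counts (not taken from the source): 100 customers in total.
tp, fn, fp, tn = 10, 4, 10, 76

model_accuracy = accuracy(tp, fp, fn, tn)
model_precision = precision(tp, fp)
model_recall = recall(tp, fn)

# Hypothetical unit costs: an unnecessary incentive vs. a lost customer.
INCENTIVE_COST = 10.0
CHURN_COST = 100.0
false_positive_cost = fp * INCENTIVE_COST
false_negative_cost = fn * CHURN_COST

print(model_accuracy, model_precision, round(model_recall, 3))
print(false_positive_cost, false_negative_cost)
```

Under these assumptions accuracy and precision differ substantially, which is why the two metrics should not be used interchangeably when reading the options.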

Question 4

[Exploratory Data Analysis]

A Machine Learning Specialist is deciding between building a naive Bayesian model or a full Bayesian network for a classification problem. The Specialist computes the Pearson correlation coefficients between each pair of features and finds that their absolute values range from 0.1 to 0.95.

Which model describes the underlying data in this situation?

- A naive Bayesian model, since the features are all conditionally independent.

- A full Bayesian network, since the features are all conditionally independent.

- A naive Bayesian model, since some of the features are statistically dependent.

- A full Bayesian network, since some of the features are statistically dependent.

Correct answer: D
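
The check described in the question can be sketched as follows: compute the Pearson coefficient for every pair of features and flag the pairs whose absolute value is high. The feature values and the 0.7 cutoff are made-up assumptions for illustration; note also that Pearson correlation only captures linear dependence, so this is a rough screen rather than a formal test of conditional independence.

```python
from itertools import combinations
from math import sqrt

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)

# Hypothetical feature columns.
features = {
    "f1": [1, 2, 3, 4, 5],
    "f2": [2, 4, 6, 8, 10],   # exact multiple of f1, so strongly correlated
    "f3": [2, 1, 3, 1, 3],    # only weakly related to f1 and f2
}

THRESHOLD = 0.7  # assumed cutoff for "strongly correlated"

dependent_pairs = [
    (a, b)
    for a, b in combinations(features, 2)
    if abs(pearson(features[a], features[b])) >= THRESHOLD
]
print(dependent_pairs)
```

Any pair that ends up in `dependent_pairs` is a sign that the independence assumption of a naive Bayesian model does not hold for those features.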

Question 5

[Data Engineering]

A Machine Learning Specialist is preparing data for training on Amazon SageMaker. The Specialist's dataset is transformed into a numpy.array, which appears to be negatively affecting the speed of the training.

What should the Specialist do to optimize the data for training on SageMaker?

- Use the SageMaker batch transform feature to transform the training data into a DataFrame

- Use AWS Glue to compress the data into the Apache Parquet format

- Transform the dataset into the RecordIO protobuf format

- Use the SageMaker hyperparameter optimization feature to automatically optimize the data

Correct answer: C

Question 6

[Exploratory Data Analysis]

A company wants to forecast the daily price of newly launched products based on 3 years of data for older product prices, sales, and rebates. The time-series data has irregular timestamps and is missing some values.

A data scientist must build a dataset to replace the missing values. The data scientist needs a solution that resamples the data daily and exports the data for further modeling.

Which solution will meet these requirements with the LEAST implementation effort?

- Use Amazon EMR Serverless with PySpark.

- Use AWS Glue DataBrew.

- Use Amazon SageMaker Studio Data Wrangler.

- Use Amazon SageMaker Studio Notebook with Pandas.

Correct answer: C
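
To make the resampling requirement concrete, here is a minimal standard-library sketch of what "resample daily and replace missing values" means. It is not how any AWS service implements the step; the sample observations are invented, and forward-filling gaps with the last known daily value is just one assumed imputation strategy.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical price observations with irregular timestamps and missing days.
raw = [
    (datetime(2024, 1, 1, 9, 30), 10.0),
    (datetime(2024, 1, 1, 17, 5), 12.0),
    (datetime(2024, 1, 2, 11, 0), 13.0),
    (datetime(2024, 1, 5, 8, 45), 14.0),
]

def resample_daily(observations):
    """Average observations per calendar day, then forward-fill empty days."""
    buckets = defaultdict(list)
    for timestamp, value in observations:
        buckets[timestamp.date()].append(value)

    first_day, last_day = min(buckets), max(buckets)
    daily = {}
    previous = None
    day = first_day
    while day <= last_day:
        if day in buckets:
            previous = sum(buckets[day]) / len(buckets[day])
        daily[day] = previous  # a missing day reuses the last known value
        day += timedelta(days=1)
    return daily

daily_prices = resample_daily(raw)
for day, price in daily_prices.items():
    print(day.isoformat(), price)
```

The result has exactly one row per calendar day between the first and last observation, which is the regular grid that downstream forecasting models usually expect.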

Question 7

[Modeling]

When submitting Amazon SageMaker training jobs using one of the built-in algorithms, which common parameters MUST be specified? (Select THREE.)

- The training channel identifying the location of training data on an Amazon S3 bucket.

- The validation channel identifying the location of validation data on an Amazon S3 bucket.

- The IAM role that Amazon SageMaker can assume to perform tasks on behalf of the users.

- Hyperparameters in a JSON array as documented for the algorithm used.

- The Amazon EC2 instance class specifying whether training will be run using CPU or GPU.

- The output path specifying where on an Amazon S3 bucket the trained model will persist.

Correct answer: A, C, F

Question 8

[Modeling]

A company sells thousands of products on a public website and wants to automatically identify products with potential durability problems. The company has 1,000 reviews with date, star rating, review text, review summary, and customer email fields, but many reviews are incomplete and have empty fields. Each review has already been labeled with the correct durability result.

A machine learning specialist must train a model to identify reviews expressing concerns over product durability. The first model needs to be trained and ready to review in 2 days.

What is the MOST direct approach to solve this problem within 2 days?

- Train a custom classifier by using Amazon Comprehend.

- Build a recurrent neural network (RNN) in Amazon SageMaker by using Gluon and Apache MXNet.

- Train a built-in BlazingText model using Word2Vec mode in Amazon SageMaker.

- Use a built-in seq2seq model in Amazon SageMaker.

Correct answer: A

Question 9

[Modeling]

A Data Scientist is building a model to predict customer churn using a dataset of 100 continuous numerical features. The Marketing team has not provided any insight about which features are relevant for churn prediction. The Marketing team wants to interpret the model and see the direct impact of relevant features on the model outcome. While training a logistic regression model, the Data Scientist observes that there is a wide gap between the training and validation set accuracy.

Which methods can the Data Scientist use to improve the model performance and satisfy the Marketing team's needs? (Choose two.)

- Add L1 regularization to the classifier

- Add features to the dataset

- Perform recursive feature elimination

- Perform t-distributed stochastic neighbor embedding (t-SNE)

- Perform linear discriminant analysis

Correct answer: A, C
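
One reason L1 regularization helps with both overfitting and interpretability is that it can push weights exactly to zero. A small sketch of the soft-thresholding step, which is the proximal operator of the L1 penalty used by some L1 solvers, shows the effect. The weights and the penalty value are made-up numbers, and this is not a full training loop.

```python
def soft_threshold(weight: float, penalty: float) -> float:
    """Shrink a weight toward zero; set it to exactly zero if it is small."""
    if abs(weight) <= penalty:
        return 0.0
    return weight - penalty if weight > 0 else weight + penalty

# Hypothetical logistic-regression weights after an unregularized update.
weights = [0.8, -0.05, 0.02, -0.6]
PENALTY = 0.1  # assumed step size times L1 strength

sparse_weights = [soft_threshold(w, PENALTY) for w in weights]
kept_features = [i for i, w in enumerate(sparse_weights) if w != 0.0]

print(sparse_weights)
print(kept_features)
```

Features whose weights end up at zero drop out of the model, leaving a shorter list of coefficients for the Marketing team to interpret.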

Question 10

[Modeling]

A company wants to enhance audits for its machine learning (ML) systems. The auditing system must be able to perform metadata analysis on the features that the ML models use. The audit solution must generate a report that analyzes the metadata. The solution also must be able to set the data sensitivity and authorship of features.

Which solution will meet these requirements with the LEAST development effort?

- Use Amazon SageMaker Feature Store to select the features. Create a data flow to perform feature-level metadata analysis. Create an Amazon DynamoDB table to store feature-level metadata. Use Amazon QuickSight to analyze the metadata.

- Use Amazon SageMaker Feature Store to set feature groups for the current features that the ML models use. Assign the required metadata for each feature. Use SageMaker Studio to analyze the metadata.

- Use Amazon SageMaker Feature Store to apply custom algorithms to analyze the feature-level metadata that the company requires. Create an Amazon DynamoDB table to store feature-level metadata. Use Amazon QuickSight to analyze the metadata.

- Use Amazon SageMaker Feature Store to set feature groups for the current features that the ML models use. Assign the required metadata for each feature. Use Amazon QuickSight to analyze the metadata.

Correct answer: D

Question 11

Each morning, a data scientist at a rental car company creates insights about the previous day's rental car reservation demands. The company needs to automate this process by streaming the data to Amazon S3 in near real time. The solution must detect high-demand rental cars at each of the company's locations. The solution also must create a visualization dashboard that automatically refreshes with the most recent data.

Which solution will meet these requirements with the LEAST development time?

- Use Amazon Kinesis Data Firehose to stream the reservation data directly to Amazon S3. Detect high-demand outliers by using Amazon QuickSight ML Insights. Visualize the data in QuickSight.

- Use Amazon Kinesis Data Streams to stream the reservation data directly to Amazon S3. Detect high-demand outliers by using the Random Cut Forest (RCF) trained model in Amazon SageMaker. Visualize the data in Amazon QuickSight.

- Use Amazon Kinesis Data Firehose to stream the reservation data directly to Amazon S3. Detect high-demand outliers by using the Random Cut Forest (RCF) trained model in Amazon SageMaker. Visualize the data in Amazon QuickSight.

- Use Amazon Kinesis Data Streams to stream the reservation data directly to Amazon S3. Detect high-demand outliers by using Amazon QuickSight ML Insights. Visualize the data in QuickSight.

Correct answer: A

Explanation:

The solution that will meet the requirements with the least development time is to use Amazon Kinesis Data Firehose to stream the reservation data directly to Amazon S3, detect high-demand outliers by using Amazon QuickSight ML Insights, and visualize the data in QuickSight. This solution does not require any custom development or ML domain expertise, as it leverages the built-in features of QuickSight ML Insights to automatically run anomaly detection and generate insights on the streaming data. QuickSight ML Insights can also create a visualization dashboard that automatically refreshes with the most recent data, and allows the data scientist to explore the outliers and their key drivers.

References:

1: Simplify and automate anomaly detection in streaming data with Amazon Lookout for Metrics | AWS Machine Learning Blog

2: Detecting outliers with ML-powered anomaly detection - Amazon QuickSight

3: Real-time Outlier Detection Over Streaming Data - IEEE Xplore

4: Towards a deep learning-based outlier detection ... - Journal of Big Data

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

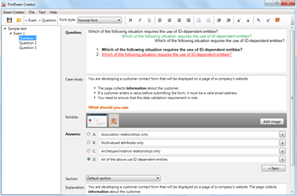

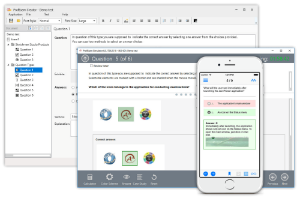

HOW TO OPEN VCEX AND EXAM FILES

Use ProfExam Simulator to open VCEX and EXAM files

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!